After 7 days of dogfooding, the addition of a test suite, documentation, and 1 confirmed user, {paint} is now v0.1.0 #rstats

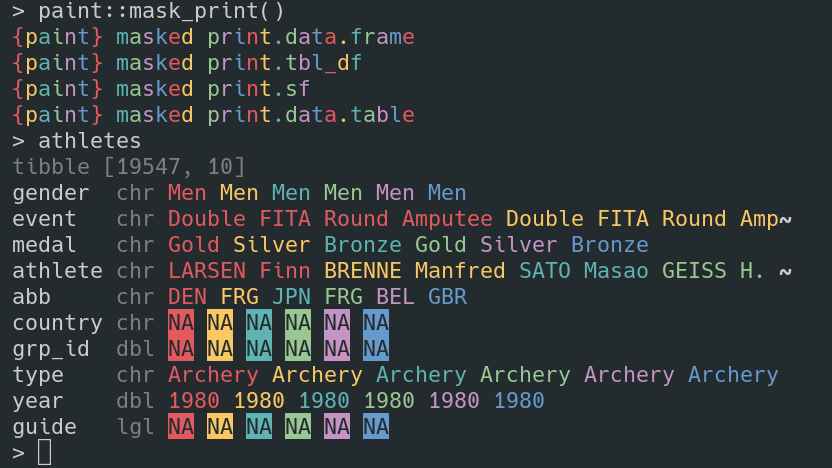

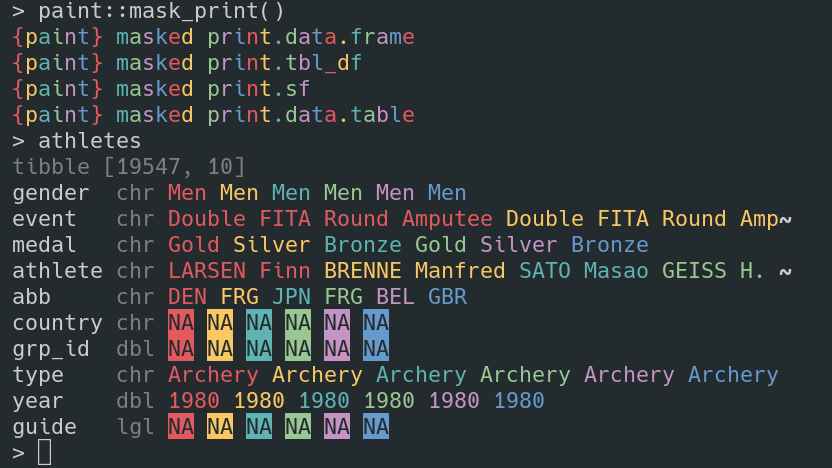

Happy Friday #rstats! This week’s diversion is alternative print() methods for data rectangles. Here’s {paint}, highly experimental, works on my machine stage:

Bit of a scraping project on that’s going to sip thousands of xml files over the next couple of days. Using #rstats {targets} dynamic branching, each xml file is a cached target. The advantage of this is that if the process is interrupted at any time, for any reason, it can be resumed with a simple tar_make(). Niiiiiiice.
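A minimal sketch of what a `_targets.R` for this could look like. The URLs and the `fetch_xml()` helper are hypothetical stand-ins, not the real project code. Each URL becomes its own branch, and `format = "file"` tells {targets} to track the downloaded file that the command returns.

```r
# _targets.R (sketch): one dynamic branch per xml file.
library(targets)

# Hypothetical helper: download one file and return its local path,
# which is what a format = "file" target must return.
fetch_xml <- function(url) {
  dir.create("xml", showWarnings = FALSE, recursive = TRUE)
  dest <- file.path("xml", basename(url))
  download.file(url, dest, quiet = TRUE, mode = "wb")
  dest
}

pipeline <- list(
  # Placeholder URLs; a real project would build this vector from an index.
  tar_target(
    xml_urls,
    c("https://example.org/a.xml", "https://example.org/b.xml")
  ),
  # pattern = map(xml_urls) creates one cached branch per URL.
  tar_target(
    xml_file,
    fetch_xml(xml_urls),
    pattern = map(xml_urls),
    format = "file"
  )
)

# The script must end with the list of targets.
pipeline
```

If the run dies halfway, the branches that already finished stay in the cache, so the next tar_make() only fetches what is missing.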

Happy Friday #rstats! Incited by @mdsumner {rmdocs} now provides replacements for utils::help and utils::?, so you never have to accidentally look at HTML help again.

New {targets} addin: tflow::rs_load_current_editor_targets(). Load targets from cache that are referred to in the code you are looking at. #rstats

Happy Friday {rmdocs} now handles namespaced::symbols #rstats

If you run the addin with the cursor on withr::with_options you’ll get served some delicious Rmd help for that function, even if you had rlang::with_options masking it via library(rlang).
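To illustrate why the namespace qualifier matters, here is a base R sketch. It is not how {rmdocs} is implemented; it uses stats::filter as a stand-in and a made-up local `filter()` to play the role of the masking function.

```r
# A local definition masks stats::filter for unqualified calls.
filter <- function(...) "masked"

unqualified <- filter(1:3)   # hits the local definition

# A tool that is handed the text under the cursor can split pkg::name
# and look the function up in that package, ignoring the search path.
qualified <- quote(stats::filter)
pkg <- as.character(qualified[[2]])   # "stats"
fun <- as.character(qualified[[3]])   # "filter"
resolved <- getExportedValue(pkg, fun)

# The lookup lands on the {stats} function, not the masking one.
identical(resolved, stats::filter)
environmentName(environment(resolved))
```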

This week I cracked a problem that I’d been stewing on for a while: Fast generation of renv.lock files.

For those not in the know: These fully describe an R project’s package dependencies and can be used to create a “known good” package environment for the project to run in. You should definitely be using these! Typically these are created with {renv}.

I set myself a budget of 3 seconds to:

{renv}

My thinking was that this amount of time is short enough to facilitate new workflows involving always-on automated lockfile generation. So instead of lockfile creation being a kind of manual discipline that is done interactively, it can become something that just automatically happens every time you build a pipeline with {targets} or render a document with {rmarkdown}.

And that means people won’t forget to do it before they go on holiday, Murphy’s law etc etc.

The generation time has to be really short because during the iteration cycle of an analysis you’re typically building a pipeline many many times in a single day. You may be adding or removing dependencies each time. Time spent waiting for things to build can rapidly become annoying, and that annoyance inspires hacks that undermine everything.

Anyway I’m happy to report success. capsule::capshot() can tick all the items I listed off in 1.5 - 2 seconds on my current project which is quite mature and laden with dependencies (~ 200 recursive deps). You give it paths to files containing your dependencies (typically a single file for me), and you get back a lockfile, built against the current .libPaths().
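For a feel of the core job, here is a rough base R sketch that resolves recursive dependencies against the current .libPaths() and records a version for each. It is not how capsule::capshot() works and it does not produce a real renv.lock (which also records sources and hashes). `library_snapshot()` is a hypothetical name, and the package names would normally be extracted from your dependency file rather than typed in.

```r
# Sketch: recursive dependencies and versions from the installed libraries.
library_snapshot <- function(pkgs, lib_paths = .libPaths()) {
  db <- utils::installed.packages(lib.loc = lib_paths)
  deps <- tools::package_dependencies(
    pkgs,
    db = db,
    which = c("Depends", "Imports", "LinkingTo"),
    recursive = TRUE
  )
  all_pkgs <- sort(unique(c(pkgs, unlist(deps, use.names = FALSE))))
  # Keep only packages that are actually installed.
  all_pkgs <- intersect(all_pkgs, rownames(db))
  data.frame(
    Package = all_pkgs,
    Version = unname(db[all_pkgs, "Version"]),
    stringsAsFactors = FALSE
  )
}

# Time it against a budget; base packages are used here so it runs anywhere.
timing <- system.time(
  snap <- library_snapshot(c("stats", "tools"))
)
snap
timing[["elapsed"]]
```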

So you’ve likely never heard of {capsule} (although it does have its fans). It’s a kind of reimagining of the {renv} workflow for my team. It actually uses {renv} under the hood. The main point of difference is that it’s a lazy workflow. You don’t typically work out of a local library. You do that only when picking something up that’s been on the shelf for a long time, or putting something “into production” - i.e. running unsupervised somewhere.

The laziness has several advantages: You get no interaction with personal dev setups. RStudio, VSCode, Emacs, Addin packages etc… none of that needs to go anywhere near the lockfile. It’s also an easy sell. There’s 2 commands you absolutely need to know and they have obvious names: capsule::create() and capsule::run().

Some cool opportunities get opened up by the always-make-a-lockfile workflow. If we’re doing that, hopefully, we’re always committing it, and so it can become a mechanism to help nudge team-mates to keep their R libraries moving forward in step.

For example, your lockfile target could pass on building a new lockfile that would contain versions behind the current one, and send a warning to update packages. There’s actually machinery already in {capsule} for that, although I am still settling on the best design. I am excited to get a feel for the best practice for this kind of stuff over the next few weeks!
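The "versions behind" check itself is simple to sketch in base R. This is not the machinery in {capsule}; `packages_behind()` is a hypothetical helper and the version numbers are made up for illustration.

```r
# Names of packages whose candidate version is older than the committed one.
# Both arguments are named character vectors, e.g. c(pkg = "1.2.3").
packages_behind <- function(committed, candidate) {
  shared <- intersect(names(committed), names(candidate))
  is_behind <- vapply(
    shared,
    function(pkg) {
      package_version(candidate[[pkg]]) < package_version(committed[[pkg]])
    },
    logical(1)
  )
  shared[is_behind]
}

# Made-up versions: the committed lockfile vs. what my library would produce.
committed <- c(dplyr = "1.1.4", rlang = "1.1.3", targets = "1.5.0")
candidate <- c(dplyr = "1.1.2", rlang = "1.1.3", targets = "1.6.0")

behind <- packages_behind(committed, candidate)
if (length(behind) > 0) {
  # A lockfile target could skip the rebuild here and nudge instead.
  warning("Update before building a lockfile: ", paste(behind, collapse = ", "))
}
```

Comparing with package_version() rather than as strings matters, since "1.10.0" sorts before "1.9.0" as text.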

Due to one of my current projects, R developers have been sharing their frustrations with CRAN with me. There are many disturbing aspects to these stories, but one that is on loop in my brain at the moment is the systemic degradation CRAN policies are creating.

I think this degradation is slow and doesn’t impact too much on functionality, so it will be hard to spot at first. If there is a trend though, over time its corrosive nature will become sorely apparent. This is because developers have confessed to:

\donttest.

It’s kind of sad to imagine what the cumulative effect looks like of developers being nudged away from creating thoroughly tested works with rich interconnected explanatory documentation. To me, it’s just an odd situation to be in: where R itself contains excellent tools for this, but our package infrastructure is having a potentially outsized influence on the utilisation of those tools.

l33t Data scientists can write #julialang to write R. They can write #haskell to write R. They can write #clojure to write R. For a long time many have been writing a half-baked version of #rstats ported to #python. Life’s a bit simpler if you Just. Write. R. Though?

Just say no to !important

Subtweeting an out of control hairy yak that has raised 2 gh issues, 1 stack overflow question, and 1 package update, and eaten most of my day.