This is a post I’ve been meaning to write for some time now about {capsule}.

{capsule} provides alternative workflows to {renv} for establishing and working with controlled package libraries in R. It also uses an renv.lock so it is compatible with {renv} - you can switch between doing things the {capsule} way and the {renv} way, or vice versa, at any time.

Introducing an R package I wrote nearly three years ago

Carefully curating a controlled package environment the {renv} way can be kind of a chore. You have to init the {renv} and then be very diligent in what you install into the project library - because the project library will be “snapshotted” to create the renv.lock. {renv} provides tools to help you keep the library nice and pruned. But you have to remember to use them. And there are some challenging edge cases - particularly around ‘dev’ packages or packages that play no role in the project but aid the process of developing it.

It’s not that the {renv} way is wrong - it’s clearly not - because it’s pretty much how other languages do it, but other languages are different from R and have different developer cultures. Importantly I think R users are much more prone to interactive REPL driven development and this, in turn, lends itself to the use of interactive development tools like: {datapasta}, {ggannotate}, {esquisse} as well as other more traditional dev tools like {styler}, {lintr}. If you use VSCode you will also have packages that support the extension experience like {languageserver} and {httpgd}.

You can install these packages into the project library and by default {renv} won’t snapshot them - but then you have to go make sure your dev dependencies are up to date each time you context switch to a new project - boring! They also contaminate the project library in subtle ways: installing a new dev package might force an update of a controlled package that would have otherwise been unnecessary - should that change be reflected in the lockfile?

It all just feels a bit hard for something tangential to getting the actual work done. The hardness of keeping a clean lockfile magnifies when you have a whole team having at it with all their personal dependencies. In my case at one time we had 3 distinct text editor / IDE workflows being used within the team, not to mention each person’s preferences for RStudio addins. Some people are more onboard with putting up with some added resistance in their dev workflow than others - again the dev culture thing plays an important role as to how functional or otherwise this setup is.

Added resistance can also show up elsewhere like the time to install the local project library. And where this hurts is that this added resistance created by {renv} makes people less likely to use it. “Aww this is just an ad hoc thing, I don’t wanna jump through the hoops”. We all know by now what happens to “ad hoc things” in Data Science Land.

{capsule} provides some new lockfile workflows that are much lazier and therefore perhaps a bit easier to get adopted as standard practice for a team.

The laziest possible workflow

You’ve created a pipeline and it’s spat out the output you wanted. You didn’t use a project library to do it. You can add an renv.lock based on the project dependencies and versions in your main library with a one-liner capsule::capshot(). You commit that lockfile because one day you might need it, but you didn’t have any added resistance to your dev workflow!

In the future if you do need to run the project against the old package versions you can fire off: capsule::run(targets::tar_make()) or capsule::run(source("main.R")) or capsule::run(rmarkdown::render("report.Rmd")) etc. This will create the local project library from the lockfile and run the R command you specified against that local library. You don’t get switched into the {renv} library - so you’re not immediately flying blind without your dev tools!
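As a rough sketch, the two halves of this lazy workflow might look like the following. The wrapper function names and the "main.R" entry point are assumptions made up for illustration; only capsule::capshot() and capsule::run() are the calls described above.

```r
# Sketch of the lazy workflow. Both helpers are hypothetical wrappers;
# only capsule::capshot() and capsule::run() come from {capsule}.

# Step 1 (today): the pipeline has already run against your main library.
# Record the versions it used in an renv.lock, then commit that file.
record_versions <- function() {
  capsule::capshot()
}

# Step 2 (some day later): build the project library from the lockfile
# and evaluate the entry point against it.
# "main.R" is an assumed file name, swap in your own entry point.
rerun_against_lockfile <- function() {
  capsule::run(source("main.R"))
}
```

Nothing here touches your day-to-day session: defining these does nothing until you call them, and the second one only matters on the day you actually need the old versions.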

Ahah but what if there’s a bug you say?! You can temporarily switch your REPL over to an R session running against the project library with capsule::repl(). You won’t have your dev tools in this REPL though, so it may be worth doing renv::init() and going full {renv} with your dev tools installed. It’s your choice and you only pay that price if you need to.

A workflow for the whingers

Here’s a scenario that might be familiar: you have a team of people rapidly smashing out a project together. Maybe it’s a Shiny app. Someone is building the datasets, someone is wiring up the GUI, someone is making the visualisations etc. With many people contributing to an early stage project there is really no such thing as a ‘controlled package environment’.

Often issues encountered on the project force changes to internal infrastructure packages, so these are constantly updated. New packages flow into the project - particularly thanks to the GUI and vis people - they’re just going wild trying to make things look great. You all need to stay synced up with packages otherwise every time you pull changes the darn thing isn’t going to run.

This kind of situation is a strong argument for {renv} due to the need to stay in sync to be able to run the project. However, if we slightly loosen the meaning of ‘in sync’ we can get away without the resistance of full {renv}. What I mean is: We probably don’t need to all have the exact same package versions. Over a short space of time, the culture of R developers is that packages are backward compatible with prior versions. So it’s probably fine if people are ahead of the lockfile, but less good if they fall behind.

With {capsule} we can have a workflow where we use a lockfile, but no project local library, to keep us all loosely in sync. If someone makes an important change to a package that is a dependency (or one comes down the pipe externally) we definitely need to call capsule::capshot() and commit a new lockfile. But if someone installs the latest version of {ggplot2} the day it drops because they happened not to have it, or they’re into bleeding edge - meh, we probably don’t need to update the lockfile. It’s fine for them to stay ahead of the lockfile until an important change is dropped.

What’s key is that when changes are made to the lockfile, everyone knows about them and updates the necessary packages. To do this you put capsule::whinge() somewhere in the first couple of lines of your project. It produces output like this:

> capsule::whinge()

Warning message:

In capsule::whinge() :

[{capsule} whinge] Your R library packages are behind the lockfile. Use capsule::dev_mirror_lockfile to upgrade.

Oh but people will ignore the warning! Probably. So you can also do this, which is how my team does it:

> capsule::whinge(stop)

Error in capsule::whinge(stop) :

[{capsule} whinge] Your R library packages are behind the lockfile. Use capsule::dev_mirror_lockfile to upgrade.

Now it’s an error. If you’re missing any packages in the lockfile, or you’re behind the lockfile versions the project doesn’t run.

If you get this error you have a one-liner to bring yourself up to the lockfile: capsule::dev_mirror_lockfile(), which will make sure every package in your library is at least the lockfile version. Importantly packages are never downgraded. So that bleeding edge {ggplot2} person can still get those Up To Date endorphins.
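To make the “at least the lockfile version” rule concrete, here is a toy sketch in base R. It is not how {capsule} implements the check: behind_lockfile() and the two named character vectors are hypothetical stand-ins for a lockfile and a library, and the version numbers are made up.

```r
# Illustrative only: this is NOT {capsule}'s implementation.
# `lockfile` and `installed` are hypothetical named character vectors
# mapping package name -> version string.
behind_lockfile <- function(lockfile, installed) {
  is_behind <- vapply(names(lockfile), function(pkg) {
    # A package missing from the library counts as behind.
    if (!pkg %in% names(installed)) return(TRUE)
    # compareVersion() returns -1 when the first version is older.
    utils::compareVersion(installed[[pkg]], lockfile[[pkg]]) < 0
  }, logical(1))
  names(lockfile)[is_behind]
}

# Made-up versions for illustration.
lockfile  <- c(ggplot2 = "3.4.0", dplyr = "1.1.0", shiny = "1.7.4")
installed <- c(ggplot2 = "3.4.2", dplyr = "1.0.10")

behind_lockfile(lockfile, installed)
#> [1] "dplyr" "shiny"
```

{ggplot2} is ahead of the lockfile so it is left alone, {dplyr} is older so it needs an upgrade, and {shiny} is missing so it needs installing. Only packages that come back from a check like this would be touched; nothing is ever rolled back.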

I am shocked by how well this workflow works. There’s a possibility of someone using a new package version with breaking changes and forgetting to update the lockfile - and I was fully expecting this to be a big problem… but it just wasn’t. The reason I think it works is that there’s no production environment in the mix here. This is an early-stage development workflow. You’re all constantly updating and running the project, so if it breaks it gets picked up very quickly. There are only ever a few commits you need to look at to see what changed, or a quick question to your teammate who will then slap their forehead and call capsule::capshot() on their machine, commit it, and away you all go.

Finally, we’ve never felt motivated to do this, but you can tighten things up a lot with something like this:

capsule::whinge(stop)

capsule::capshot()

If all your package versions meet or exceed the lockfile, proceed immediately to creating a new lockfile, then run the project. This was actually why I created capsule::capshot(), it’s designed as a very fast ‘in-pipeline’ lockfile creator.

Conclusion

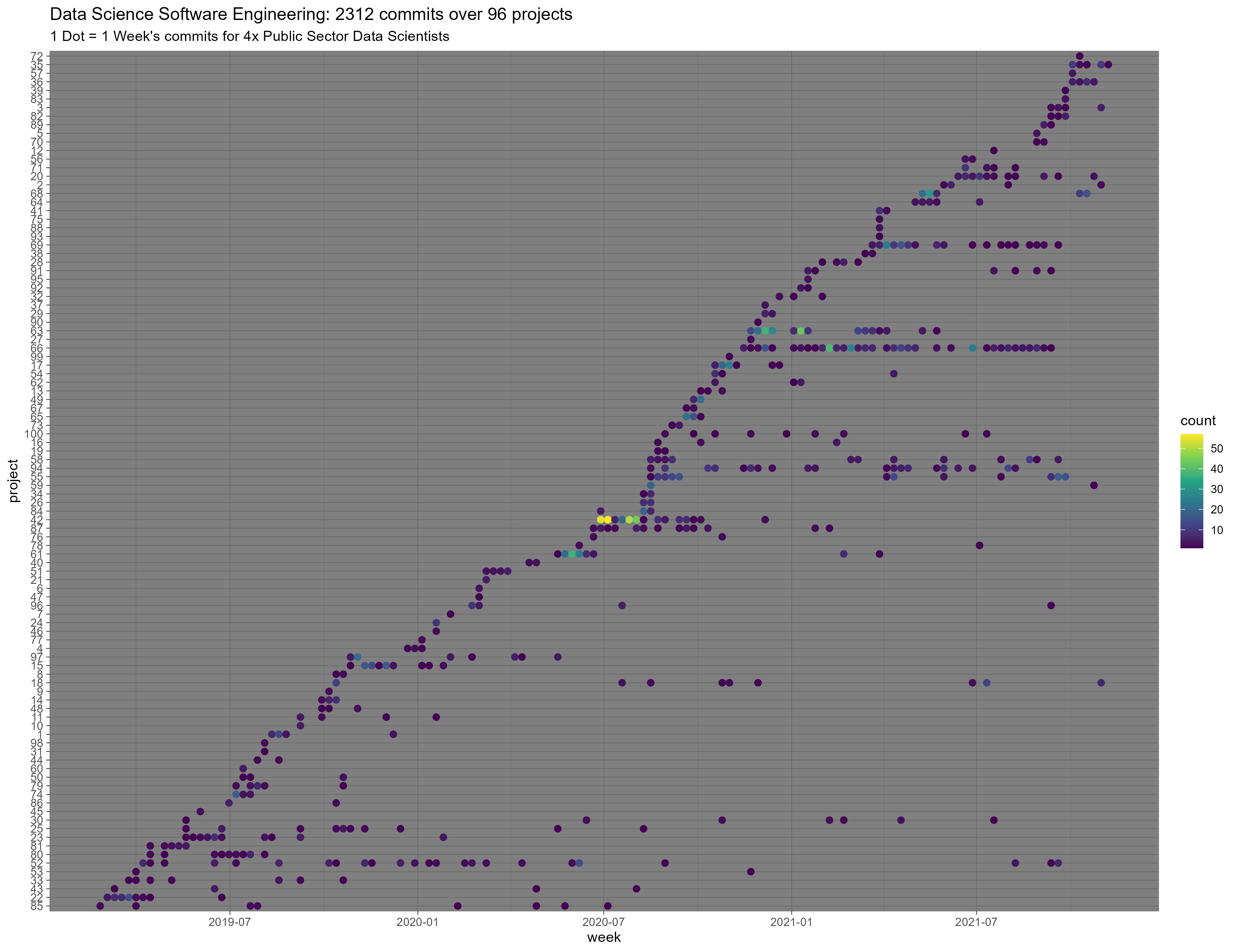

{capsule} is a package I wrote nearly 3 years ago for my team as our main R package dependency management tool. I was always hesitant to promote it because I wasn’t confident my ideas would translate well outside our team, and I also wasn’t confident I wouldn’t balls up someone’s project and they’d curse me forevermore.

It’s fairly battle-hardened now though. It has a niche little fan base of people who saw it in my {drake} post and liked it. I assume a lot of this is due to the fact that there’s just less to it than {renv}. I was fairly stoked to find out it was used in the national COVID modelling pipelines in at least two countries recently. It kind of excels in that fast-paced “need something now, but can’t get all these collaborators up to speed on {renv} workflow” zone.

What I’ve learned over the last couple of years is that there isn’t ‘one workflow that fits all’ in this space. It’s about who is on the team and what that team is doing. Workflows that are standard for software engineers are sometimes hard to sell to analytic or scientific collaborators who have a different relationship with their software tool. As I have said many times in the past: If you want people to do something important, ergonomics is key! Success is much more likely with something that feels like it is “for them” rather than something that is “for someone else but we just have to live with it”. And I’m not saying I have the answer for your team, but it might be worth a look if {renv} isn’t clicking.

Finally, {capsule} isn’t exactly a competitor to {renv}. It’s not fair to pitch it like that. It uses some {renv} functions under the hood. It wouldn’t have been possible if Kevin Ushey hadn’t been extremely accommodating in {renv} with some changes that enabled {capsule} to work. I think of it more as a ‘driver’ for {renv} that you can switch out at any time for the standard {renv} experience.